Translating Earbud

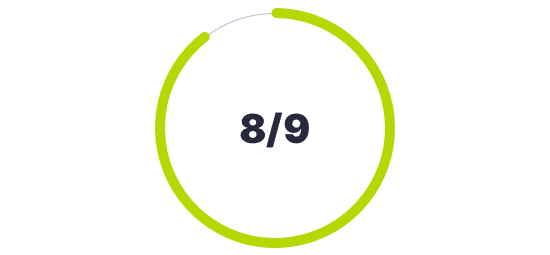

Technology Life Cycle

Marked by a rapid increase in technology adoption and market expansion. Innovations are refined, production costs decrease, and the technology gains widespread acceptance and use.

Technology Readiness Level (TRL)

Technology is developed and qualified. It is readily available for implementation, but the market is not yet entirely familiar with it.

Technology Diffusion

Adopts technologies once Early Adopters have proven them, preferring technologies that are well established and reliable.

Translating earbuds are wireless earbuds that translate spoken words from other languages into the user's preferred language. Speech input is processed either in the cloud or offline on the device, passing through three stages: speech recognition, machine translation, and speech synthesis.
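The three-stage flow can be sketched in Python as follows. This is a minimal illustration under stated assumptions: `recognize_speech`, `translate_text`, `synthesize_speech`, and `run_pipeline` are hypothetical names, each stage is a stub (the "audio" is just UTF-8 text and translation is a one-entry phrase table), and the cloud-or-offline choice is modeled only as a routing label rather than as two different engines.

```python
from typing import Tuple

# Hypothetical stand-ins for the three stages. A real device would call a speech
# recognizer, a translation model, and a speech synthesizer instead.

def recognize_speech(audio: bytes) -> str:
    """Speech recognition (stub): treat the audio bytes as an already-transcribed UTF-8 string."""
    return audio.decode("utf-8")

def translate_text(text: str, target_lang: str) -> str:
    """Machine translation (stub): look the phrase up in a tiny hypothetical table."""
    phrase_table = {("buenos días", "en"): "good morning"}
    # Fall back to the untranslated text when the phrase is unknown.
    return phrase_table.get((text.lower(), target_lang), text)

def synthesize_speech(text: str) -> bytes:
    """Speech synthesis (stub): return the text as UTF-8 bytes in place of audio."""
    return text.encode("utf-8")

def run_pipeline(audio: bytes, target_lang: str, network_available: bool) -> Tuple[str, bytes]:
    """Run recognition, translation, and synthesis; report where processing happened."""
    # Routing assumption: use the cloud when a connection exists, otherwise stay offline.
    # Both routes use the same stubs here; a real system would call different engines.
    route = "cloud" if network_available else "offline"
    text = recognize_speech(audio)
    translated = translate_text(text, target_lang)
    return route, synthesize_speech(translated)
```

Calling `run_pipeline("buenos días".encode("utf-8"), "en", network_available=False)` returns `("offline", b"good morning")`. Keeping each stage behind its own function mirrors the idea that recognition, translation, and synthesis can be swapped or upgraded independently.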

Neural machine translation, an approach loosely inspired by the human brain, has recently improved the quality and accuracy of translations significantly, approaching human performance for written text. It works in two stages: encoding and decoding. In the encoding stage, text in the source language is fed into the model and transformed into a series of vectors that represent its linguistic content. In the decoding stage, these vectors are converted into text in the target language.
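The encode-then-decode idea can be illustrated with a deliberately tiny Python sketch. It is not a neural network: the vectors are hand-picked, hypothetical three-dimensional values rather than learned ones, and decoding simply picks the target word whose vector is closest by cosine similarity. A real NMT encoder produces context-dependent vectors for the whole sentence, and its decoder generates the output one word at a time conditioned on them; this toy skips both steps to show only how text can pass through a shared vector space.

```python
import math

# Hypothetical, hand-picked "meaning" vectors. A real NMT system learns
# high-dimensional vectors from data; these exist only for illustration.
SOURCE_EMBEDDINGS = {  # Spanish word -> vector
    "hola": (1.0, 0.0, 0.0),
    "amigo": (0.0, 1.0, 0.0),
    "gracias": (0.0, 0.0, 1.0),
}
TARGET_EMBEDDINGS = {  # English word -> vector
    "hello": (0.9, 0.1, 0.0),
    "friend": (0.1, 0.9, 0.0),
    "thanks": (0.0, 0.1, 0.9),
}

def encode(sentence):
    """Encoding stage (toy): turn source-language text into a sequence of vectors."""
    return [SOURCE_EMBEDDINGS[token] for token in sentence.lower().split()]

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def decode(vectors):
    """Decoding stage (toy): map each vector to the closest target-language word."""
    words = [
        max(TARGET_EMBEDDINGS, key=lambda w: cosine(v, TARGET_EMBEDDINGS[w]))
        for v in vectors
    ]
    return " ".join(words)

print(decode(encode("Hola amigo")))  # prints: hello friend
```

Because the toy translates word by word, it cannot reorder words or use context, which is exactly what trained encoder-decoder models are designed to do.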

Several large tech companies are working on real-time translation devices, and competition is likely to drive rapid improvements in the quality and practicality of these products. Applications that integrate real-time translation for refugees are also already being developed. These apps are tailored to the problems migrants face and include additional features such as crowd-sourced legal document review and automatic filtering of fake news and extremist propaganda.

Future Perspectives

Beyond human-to-human translation, we could envision a universal translator that converts natural language into programming code, allowing machines to be programmed by users with no coding knowledge. This feature could be useful in several industries: a factory worker, for example, could program a machine simply by talking to it, enabling intuitive collaboration between humans and machines.
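As a rough illustration of turning speech into machine instructions, the Python sketch below maps a transcribed spoken instruction to a command string. It is a rule-based toy using regular expressions, not the kind of learned language model a universal translator would need, and the command names (`MOVE_ARM`, `SET_SPEED`, `STOP`) and the function `speech_to_command` are hypothetical.

```python
import re
from typing import Optional

# Hypothetical command grammar for a factory machine. Each rule pairs a spoken
# phrase pattern with a template for a machine instruction.
RULES = [
    (re.compile(r"move (?:the )?arm to position (\d+)"), "MOVE_ARM {0}"),
    (re.compile(r"set (?:the )?speed to (\d+)(?: percent)?"), "SET_SPEED {0}"),
    (re.compile(r"(?:stop|halt)(?: the machine)?"), "STOP"),
]

def speech_to_command(transcript: str) -> Optional[str]:
    """Map a transcribed spoken instruction to a machine command, or None if unrecognized."""
    # Normalize case and strip trailing punctuation left by speech recognition.
    text = transcript.lower().strip().rstrip(".!")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            # Fill the template with any captured numbers (none for STOP).
            return template.format(*match.groups())
    return None
```

For example, `speech_to_command("Move the arm to position 3.")` returns `"MOVE_ARM 3"`, and an unrecognized request returns `None`, so the machine can ask the worker to rephrase rather than guess.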

While this technology is promising, it could also reduce the incentive to learn languages, and language acquisition brings many benefits, ranging from improved memory and mental flexibility to increased creativity and better prioritization skills. Losing these benefits may be a price worth paying for a future in which language barriers are effectively broken down. By bridging the gap between people who speak different languages, translating earbuds would make it much easier to understand the perspectives of people across the world, possibly even challenging the role of English as the global lingua franca.

In The Hitchhiker’s Guide to the Galaxy, writer Douglas Adams describes a “small, yellow, leech-like creature” called the Babel fish. This animal “feeds on brain-wave energy, absorbing all unconscious frequencies and then excreting telepathically a matrix formed from the conscious frequencies and nerve signals picked up from the speech centers of the brain, the practical upshot of which is that if you stick one in your ear, you can instantly understand anything said to you in any form of language.” New brain-machine interfaces built with neuromorphic chips could allow us to create a silicon-based Babel fish sooner than we might expect. Along the same lines, translating earbuds could eventually be paired with the cameras in XR glasses to translate sign language into spoken words.

There is one core difference between Douglas Adams’ Babel fish and current technologies: the Babel fish was “mindbogglingly useful” because it could translate not only vocabulary but also the cultural nuances embedded in language. Languages are living, ever-changing structures, and many sociolects and dialects are spoken within the same language. This technology must therefore capture the many layers of a language, such as slang and sociolects, rather than following only the regulated standards of speech codified by language academies.

One way around this issue would be to create plugins for specific dialects and sociolects. Celebrities, for instance, could create plugins with their own slang and voice so that anyone could sound like their chosen idol. Even in this scenario, however, the plugins would remain exceptions that only emphasize the existence of a "standard" way of speaking.
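A plugin system of this kind could be sketched in Python as a registry of functions that rewrite the standard translation into a chosen dialect or sociolect. The names `register_plugin`, `render`, and the `casual-en` plugin are hypothetical, and the word substitutions are illustrative only; real dialect adaptation would involve far more than string replacement.

```python
from typing import Callable, Dict

# Hypothetical plugin registry: each plugin rewrites standard-language output
# into a specific dialect or sociolect after the base translation step.
DialectPlugin = Callable[[str], str]
PLUGINS: Dict[str, DialectPlugin] = {}

def register_plugin(name: str):
    """Decorator that stores a dialect plugin under the given name."""
    def decorator(func: DialectPlugin) -> DialectPlugin:
        PLUGINS[name] = func
        return func
    return decorator

@register_plugin("casual-en")
def casual_english(text: str) -> str:
    # Illustrative word substitutions only; a real plugin would need far more.
    replacements = {"going to": "gonna", "want to": "wanna"}
    for standard, casual in replacements.items():
        text = text.replace(standard, casual)
    return text

def render(standard_text: str, plugin_name: str = "") -> str:
    """Apply the chosen plugin, or return the standard translation unchanged."""
    plugin = PLUGINS.get(plugin_name)
    return plugin(standard_text) if plugin else standard_text
```

Here `render("I am going to eat", "casual-en")` returns `"I am gonna eat"`. In this design the plugin runs only after the standard translation, which reflects the concern above: the dialect layer is an add-on to a "standard" output rather than a first-class language.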

Image generated by Envisioning using Midjourney